The EU AI Act, also known as the Artificial Intelligence Act, is the world’s first comprehensive piece of legislation directly governing artificial intelligence (AI). It entered into force in August 2024, and its strict requirements apply in phases from 2025 onward.

Its objective is to ensure that AI systems are developed and used in a trustworthy and responsible way across Europe. Notably, the Act does not seek to create barriers to AI but rather to regulate its use, so it should not deter organizations from embracing AI.

The AI Act essentially sorts applications of AI into four risk categories: unacceptable, high, limited, and minimal. These categories generally correspond to the particular AI use case or application and its potential impact on people’s health, safety, and fundamental rights. AI practices that pose an unacceptable risk are banned outright, and breaches carry the heaviest penalties: fines of up to €35 million or 7 percent of a company’s total worldwide annual turnover, whichever is greater.
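To make “whichever is greater” concrete, here is a minimal Python sketch of that cap. It assumes only the two figures above: it ignores the Act’s other penalty tiers and any adjustments for smaller companies, and the function name is hypothetical.

```python
# Illustrative arithmetic only. Assumption: the cap for prohibited practices
# is the greater of a fixed EUR 35 million and 7 percent of total worldwide
# annual turnover. Other penalty tiers and SME rules are ignored.

FIXED_CAP_EUR = 35_000_000
TURNOVER_PERCENT = 7


def max_fine_prohibited_practice(annual_turnover_eur: int) -> int:
    """Return the upper bound of the fine in whole euros (rounded down)."""
    if annual_turnover_eur < 0:
        raise ValueError("turnover cannot be negative")
    # Integer arithmetic avoids floating-point noise such as 35000000.000000004.
    turnover_based = annual_turnover_eur * TURNOVER_PERCENT // 100
    return max(FIXED_CAP_EUR, turnover_based)


if __name__ == "__main__":
    for turnover in (100_000_000, 500_000_000, 2_000_000_000):
        cap = max_fine_prohibited_practice(turnover)
        print(f"turnover EUR {turnover:,}: cap EUR {cap:,}")
```

With these figures, the fixed €35 million amount is the binding cap for any company with annual turnover below €500 million (7 percent of €500 million is exactly €35 million); above that threshold, the percentage-based amount takes over.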

As AI is set to influence so many parts of life in the modern world, there is a strong possibility that the EU AI Act could become a global standard, determining to what extent AI can interact with everyday life.

One important element is that the Act regulates AI applications placed on the market or used within the EU, including free-to-use apps [Article 2], and specifically requires human oversight of high-risk AI systems [Article 14]. Similar to the General Data Protection Regulation (GDPR), the AI Act reaches beyond the EU’s borders: it applies to organizations based outside the EU if they place AI systems on the EU market or if their systems’ output is used in the EU. Systems already on the market will have a transition period in which to achieve compliance.

The Act also gives individuals new rights, notably around complaints and transparency about how AI-driven decisions affect them. High risk is the most expansive category. It likely includes many of the types of systems in use today, including biometric identification, education, recruitment, financial evaluation, and insurance-related systems. As under GDPR, systems processing data that could significantly impact the fundamental rights and freedoms of individuals will come under greater scrutiny.

Much as GDPR requires a data protection impact assessment (and, where high risk remains, prior consultation with the supervisory authority), the AI Act requires an impact assessment before certain high-risk systems are put into use, with the results notified to the market surveillance authority. This is called a fundamental rights impact assessment, and it is required of some (not all) deployers, depending on who they are and how sensitive the use case is. Again, much as GDPR gives data subjects the right not to be subject to decisions based solely on automated processing, the AI Act requires that the use of high-risk systems be overseen by human beings to help prevent potentially harmful outcomes.

The Act requires that high-risk AI systems be designed and developed so that their operation is sufficiently transparent to enable users to interpret the output and use it appropriately [Article 13]. The documentation that supports this transparency will form an integral part of the risk management compliance program in large organizations. A practical first step in understanding the legislation’s impact is therefore to identify all AI systems in use and assess their risk level. This inventory also enables organizations to develop mitigation strategies that allow the continued use or commercialization of AI systems in the EU market.
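As a sketch of what such an inventory might look like, the Python below records each system with its owner and use cases and assigns it a tier. The tier names follow the four categories described earlier, but the use-case labels and their mapping to tiers are hypothetical simplifications for illustration, not a legal classification. Unknown use cases default to high risk so that they are reviewed rather than silently ignored.

```python
from dataclasses import dataclass, field
from enum import Enum


class RiskTier(Enum):
    # Ordered so that a larger value means a stricter tier.
    MINIMAL = 1
    LIMITED = 2
    HIGH = 3
    UNACCEPTABLE = 4


# Hypothetical, simplified mapping for illustration only; real
# classification needs legal review against the Act itself.
USE_CASE_TIERS = {
    "social_scoring": RiskTier.UNACCEPTABLE,
    "recruitment": RiskTier.HIGH,
    "credit_scoring": RiskTier.HIGH,
    "biometric_identification": RiskTier.HIGH,
    "customer_chatbot": RiskTier.LIMITED,
    "spam_filter": RiskTier.MINIMAL,
}


@dataclass
class AISystem:
    name: str
    owner: str  # accountable business unit
    use_cases: list[str] = field(default_factory=list)


def classify(system: AISystem) -> RiskTier:
    """Return the strictest tier across the system's use cases.

    Unknown use cases, and systems with no recorded use cases,
    default to HIGH so that they are reviewed rather than ignored.
    """
    tiers = [USE_CASE_TIERS.get(u, RiskTier.HIGH) for u in system.use_cases]
    return max(tiers, key=lambda t: t.value, default=RiskTier.HIGH)


if __name__ == "__main__":
    inventory = [
        AISystem("CV screener", "HR", ["recruitment"]),
        AISystem("Support bot", "Customer service", ["customer_chatbot"]),
        AISystem("Mail filter", "IT", ["spam_filter"]),
    ]
    # List the riskiest systems first so they are reviewed first.
    for system in sorted(inventory, key=lambda s: classify(s).value, reverse=True):
        print(f"{system.name} ({system.owner}): {classify(system).name}")
```

Taking the strictest tier across a system’s use cases reflects the idea that a single high-risk use is enough to bring the whole system under closer scrutiny.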

These inventories should tie neatly into a tiered compliance framework, supported by audits or technical measures that meet the Act’s transparency and explainability requirements. Again, this should mirror the technical and organizational measures required by GDPR Article 32. We can think of this as an extra layer on top of an existing compliance foundation for managing data processing and protecting users.
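One way to express such a tiered framework is a catalogue of required controls per tier, so that each inventoried system yields a list of gaps. In the Python sketch below, the control names are hypothetical internal labels loosely modelled on the high-risk requirements (risk management, data governance, documentation, logging, human oversight, accuracy and robustness) and on the transparency duty for limited-risk systems; they are not terms defined by the Act.

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Hypothetical control catalogue per tier: illustrative labels for an
# internal framework, not a complete or authoritative list of obligations.
REQUIRED_CONTROLS: dict[RiskTier, set[str]] = {
    RiskTier.HIGH: {
        "risk_management_process",
        "data_governance",
        "technical_documentation",
        "logging_enabled",
        "human_oversight_procedure",
        "accuracy_robustness_testing",
    },
    RiskTier.LIMITED: {"ai_disclosure_to_users"},
    RiskTier.MINIMAL: set(),
}


def compliance_gaps(tier: RiskTier, controls_in_place: set[str]) -> set[str]:
    """Return the required controls still missing for a system in `tier`.

    Unacceptable-risk practices cannot be remediated with controls,
    so this raises instead of returning a gap list.
    """
    if tier is RiskTier.UNACCEPTABLE:
        raise ValueError("prohibited practice: decommission rather than remediate")
    return REQUIRED_CONTROLS[tier] - controls_in_place


if __name__ == "__main__":
    # Controls already established under an existing GDPR programme.
    existing = {"logging_enabled", "technical_documentation"}
    print(sorted(compliance_gaps(RiskTier.HIGH, existing)))
```

Passing in controls already established under the GDPR programme means the returned set shows only the extra layer the AI Act adds, which matches the idea of building on an existing compliance foundation.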

All organizations, regardless of whether they develop AI systems, would be well advised to put policies and procedures in place around the use of AI. Here they may be able to build on GDPR’s data protection impact assessment (DPIA), which should already address transparency and fairness.

For developers, this should address the requirements of explainability and non-discrimination in automated decision-making. For user organizations (deployers, in the Act’s terminology: organizations that use AI systems under their authority rather than develop them), this should address the requirement to use the system in accordance with the provider’s documentation, together with a curated approach to how the system is used.

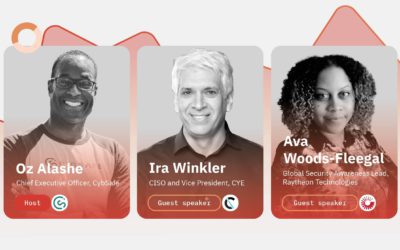

Another universal step is introducing training and awareness around the implications of the Act. This is particularly important for meeting the Act’s human oversight requirement, which aims to prevent irresponsible decisions that could significantly affect the fundamental rights and freedoms of individuals.

How is this relevant to human risk management within organizations? From the perspective of measuring and managing human cyber risk, the analysis and use of AI systems must not negatively impact the fundamental rights of individuals. In other words, the organization’s security awareness professionals should have oversight of the results of such systems.

It will be essential to roll out employee training and embed AI awareness across the organization. AI literacy, which the Act requires providers and deployers to ensure among their staff [Article 4], will be key here; it can be delivered through the human risk management program and overseen by the CISO or relevant security team. Raising awareness among colleagues about the Act and its implications for their roles will ensure that the oversight element is adequately supported. It will also help organizations that do not specialize in software development to understand where higher-risk systems form part of their infrastructure.

Companies that use AI tools and ‘foundation models’ (general-purpose AI models, in the Act’s final text) to deliver their services must consider and manage risks accordingly and comply with the transparency obligations set out in the Act. Where deployers of a high-risk system believe its use presents an elevated risk, they have a responsibility to inform the provider and suspend use. Beyond aiming to set the standard in AI safety, ethics, and responsible use, the EU AI Act mandates transparency and accountability, and it sets out obligations that address the use of generative AI (GenAI).

GenAI tools such as ChatGPT are not classified as high-risk in themselves, but they must comply with transparency requirements and EU copyright law, for example by disclosing that content was generated by AI and by designing the model to prevent it from generating illegal content. Additionally, users of AI systems will be obliged to use them in accordance with the provider’s instructions, which will require appropriate training on those same systems.

In summary, the Act will be instrumental in supporting AI-driven innovation in Europe. It should accelerate the generation and development of new ideas and improve decision-making, with a net positive effect for organizations. An environment in which users can trust what AI has to offer will further encourage its beneficial use in business.

In addition to the general benefits of classification, compliance, and governance, the EU’s framework should lead the way in embedding trust in AI systems, reinforcing users’ confidence that AI can be harnessed for good without undermining their fundamental human rights and freedoms.