There is a big, hairy elephant in the room when it comes to phishing:

Many organisations believe that it’s okay, or even right, to punish people who repeatedly fail phishing simulations, or that they have no choice but to do so.

Are they right?

Before we answer that, let’s remind ourselves exactly what phishing is.

What is phishing?

According to the NCSC, phishing…

“describes a type of social engineering where attackers influence users to do ‘the wrong thing’, such as disclosing information or clicking a bad link. Phishing can be conducted via a text message, social media, or by phone, but these days most people use the term ‘phishing’ to describe attacks that arrive by email.”

Phishing works because it exploits people’s inherent social instincts, such as being helpful and efficient. Phishing attacks can be particularly powerful because these instincts also make us good at our jobs, and shouldn’t be discouraged.

So, how are organisations supposed to mitigate the risk phishing poses?

How do you mitigate phishing risks?

Well, the truth is that mitigations require a combination of technological, process and people-based security interventions, all of which should aim to improve resilience against phishing without disrupting the productivity of users.

Intelligent phishing simulations are very effective as an awareness tool. However, used in a punitive fashion, they can start to create problems and diminish morale. They can make employees view the IT or security teams as being “out to get people”. They can negatively affect the relationship between those working in security and the rest of the organisation.

“Never punish users who are struggling to recognise phishing emails. Training should aim to improve your users’ confidence and willingness to report future incidents.”

Why it makes little sense to punish phishing victims

Going a step further, punishing those who misdiagnose simulated phishing emails can cultivate a culture of fear.

Think about that for a second:

A culture of fear.

Fear is a massive hindrance.

Fear is why a “Sam” in marketing has yet to report that he inadvertently introduced malware into the network last month.

Fear is why communication between IT support and the rest of the organisation, as it pertains to active security incidents, is often non-existent.

By definition, those working within a culture of fear are afraid that if they admit to clicking a link, or opening an attachment, they’ll be punished, fired or, in extreme cases, sued.

As Brian Honan, head of Ireland’s Computer Security Incident Response Team, puts it…

“Blaming a security breach on one element alone is failing to appreciate the complexity of enterprise information security. There are many elements that can fail which can cause a security breach. These can range from technical issues, to lack of policies, or to poorly trained personnel.”

“When we look at phishing attacks we very often look at the end user as being the weakest link and the point of origin of the breach. However, this can be myopic and blind us to other elements we need to consider… If there are consistent violations of policies then this indicates either policies are not appropriate for the business needs of the organisation, hence users violate them to get their work done, or it’s an indication that users are not properly aware of why policies are in place.”

Why punish people for being human?

Brian Honan is a smart man.

If phishing works because it exploits the very nature of what makes humans tick, then why punish people for being human? Humans are fallible; they will make mistakes.

For reasons outlined above, punishing people can have a negative impact on your company by creating hostile environments, whereby employees are blamed or actively punished for slip-ups, ultimately reducing long-term reporting. It can undermine the trust relationship people develop with their companies – trust is important because it creates job satisfaction, employee engagement and better job performance. Research suggests punishment may also cause frustration and resentment towards security staff, with feelings of being tricked. Don’t be the enemy of your employees – work with them and empower them to detect phishing emails.

Does punishing phishing victims change behaviour?

If you need any further evidence for the case against punishing phishing victims, consider a series of experiments conducted by Nina Mazar, On Amir, and Dan Ariely.

In the experiments, the three behavioural scientists investigated rule-breaking (or, as they put it, “cheating”). Specifically, they wanted to know what might influence how much participants were willing to “cheat”.

Financial incentives, the researchers found, had little effect.

Equally, the likelihood of being caught had little effect on levels of cheating.

Crucially, increasing the severity of punishment also had little effect on levels of cheating.

The experiments suggested that increasing the severity of punishment does not prevent people from breaking the rules even when they know they are breaking the rules.

It’s almost always the case that phishing victims do not know they are “breaking the rules”. For the most part, they’re simply attempting to do their jobs well.

So can we really expect punishments to change their behaviour?

A case for discrimination?

It is also worth noting that punishing people who fall for phishing simulations is fraught with other dangers for the organisation.

For example, certain types of phishing emails might trick people of certain genders, races and religions differently.

A phishing email might have a link to a website more of interest to men than women.

An email about particular stores or businesses might be more enticing to people of a certain income.

Certain emails might be more influential to people with certain job responsibilities.

It’s difficult to anticipate and avoid any potential demographic skewing from a simulated phishing email. People who are punished might thus object that they were unfairly targeted by a certain email — and this might open up claims of discrimination, lawsuits, negative publicity, and a Pandora’s box of legal and PR woes.

Is this worth it?

Is it ever right to punish your employees?

So, the question is: is it ever right to punish phishing victims or those who misdiagnose phishing simulations?

It’s your call, but we struggle to think of a situation in which the likely result is worth the risk.

Unless you are doing everything (and we mean EVERYTHING) you can and should be doing to prevent phishing emails from being produced and reaching your people in the first place, why would you focus on punishing the victim?

What would your legal standpoint be if you did?

Phishing mitigation alternatives

First, you need to consider the technical defences you have in place and the processes and policies you’re asking your people to follow.

Do you make it difficult for phishing emails to reach users?

Do you provide an easy way for people to check if an email is a phishing attack? (This is a two-way process, by the way, not just an automated reporting function where users do not receive feedback!)

Do you have protection in place to mitigate the effects of an attack?

Do you have the ability to respond to incidents quickly?

All of these considerations are covered in the NCSC’s excellent, comprehensive phishing guidance.

Why are your people falling for phishing attacks?

Next, you need to try to understand why your people are falling for phishing attacks in the first place. You might do this by sending carefully constructed simulated emails, designed to measure the effectiveness of various influencing factors (something CybSafe does automatically), or by surveying staff at the point of failure and asking them why they did what they did.

This understanding will allow you to identify where gaps exist between expectation and understanding, so that you can direct tailored training to the individuals in your organisation who need it the most. Take this scenario, for example:

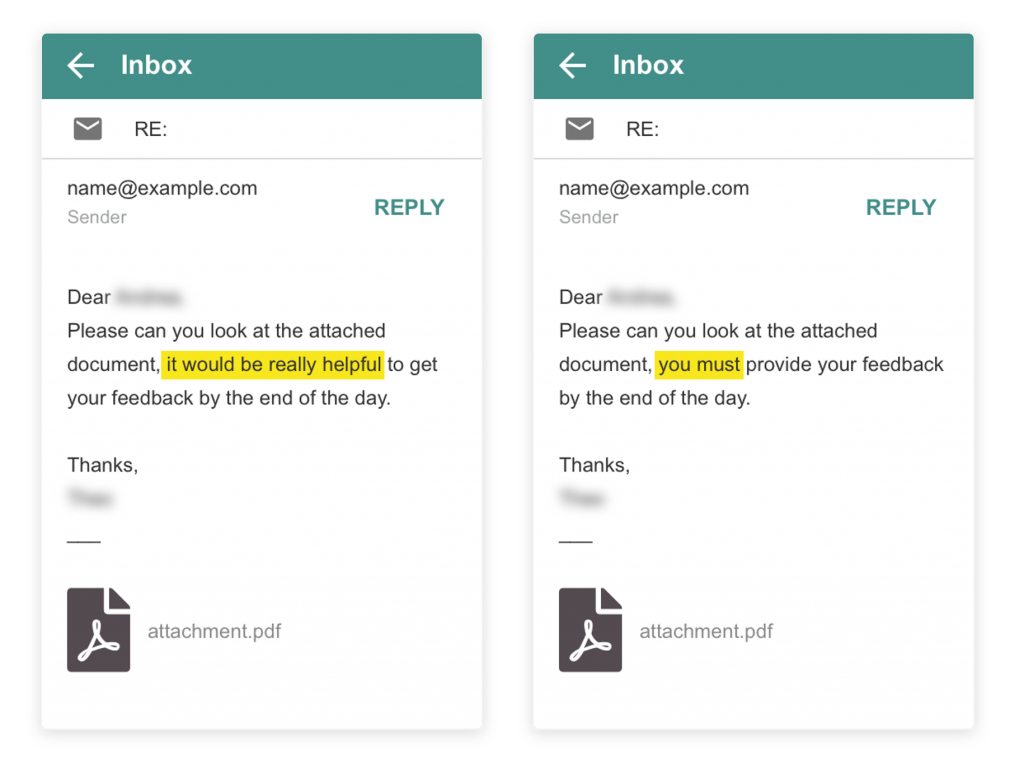

Suppose you identify that users within the finance department are overly susceptible to legal-category phishing emails that use authoritative language and evoke panic. You can then run training and a supporting poster campaign to clarify what to do when faced with a legal request, what managers will and will not ask users to do, and how they will go about doing so (i.e. ‘We will never use language that is likely to make you panic’).
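As a rough illustration of how a pattern like this might be spotted, here is a minimal Python sketch. It is not how CybSafe or any other platform works; the data, the function names (`summarise`, `groups_needing_training`) and the thresholds are all hypothetical. It deliberately reports on groups (department and email category) rather than on named individuals, in keeping with the aim of directing training rather than blame.

```python
from collections import defaultdict

# Hypothetical simulation results: (department, email category, clicked?)
RESULTS = [
    ("finance", "legal", True),
    ("finance", "legal", True),
    ("finance", "legal", False),
    ("finance", "delivery", False),
    ("finance", "delivery", False),
    ("marketing", "legal", False),
    ("marketing", "legal", False),
    ("marketing", "delivery", True),
    ("marketing", "delivery", False),
]


def summarise(results):
    """Return {(department, category): (clicks, emails_sent)}."""
    sent = defaultdict(int)
    clicked = defaultdict(int)
    for department, category, was_clicked in results:
        sent[(department, category)] += 1
        if was_clicked:
            clicked[(department, category)] += 1
    return {group: (clicked[group], sent[group]) for group in sent}


def groups_needing_training(results, threshold=0.5, min_sent=3):
    """List groups whose click rate meets the (assumed) threshold.

    Groups with fewer than min_sent emails are skipped, because a rate
    based on one or two emails says very little.
    """
    flagged = []
    for group, (clicks, total) in summarise(results).items():
        if total >= min_sent and clicks / total >= threshold:
            flagged.append(group)
    return sorted(flagged)


if __name__ == "__main__":
    for department, category in groups_needing_training(RESULTS):
        print(f"Consider tailored training: {department} / {category}")
```

With the made-up data above, only the finance department's response to legal-category emails is flagged. In practice you would want far more data per group, and sensible thresholds for your own organisation, before drawing any conclusions.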

If dangerous behaviours do not improve after a period of time, say three or six months, you might consider limiting access to critical data as a preventative measure.

Of course, you have to accept that this might also impede those users’ ability to do their jobs fully. But the truth is that if you don’t see an improvement over that period of time, there are likely to be other issues with behaviour or ability that might require a different kind of approach beyond training.

The NCSC’s guidance covers this, and the consequences of blaming users, nicely.

At CybSafe, we are committed both to providing organisations with measurements, metrics, indicators and insights around their human cyber risk and to studying the science of cyber security. We’re excited that soon we’ll be able to lift the cover properly on some research funding we’ve received to explore this very question: What is the impact of simulated phishing emails and punishment on employee awareness and work-based outcomes such as productivity and trust?

Sadly, a tick-box approach doesn’t work

To be clear – we’re not saying that you shouldn’t train your people or that you shouldn’t expect this training to have an impact. If you take an intelligent and scientific approach, both are achievable.

But the bottom line is: a tick-box approach to training doesn’t work.

Besides, the human aspect of cyber security is too important. It requires an intelligent, scientific, data-driven approach. And this high bar should be set for your awareness training, phishing simulations and all other behaviour change interventions.

The CybSafe platform offers just that and, importantly, demonstrably reduces your risk.

Would you like to know more? Just ask.